It can be used to upload various file formats, including CSV.


In Chapter 1 we already built a simple data processing pipeline including tokenization and stop word removal.

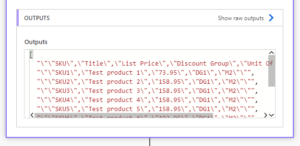

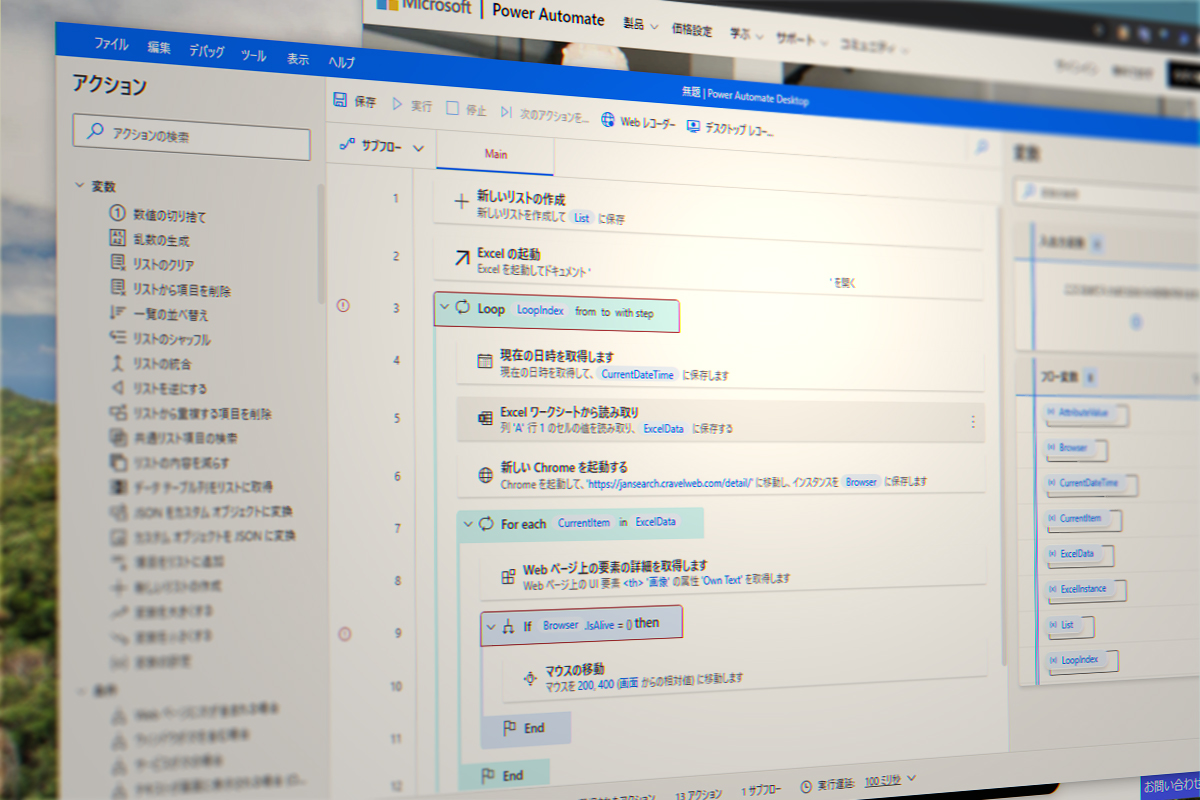

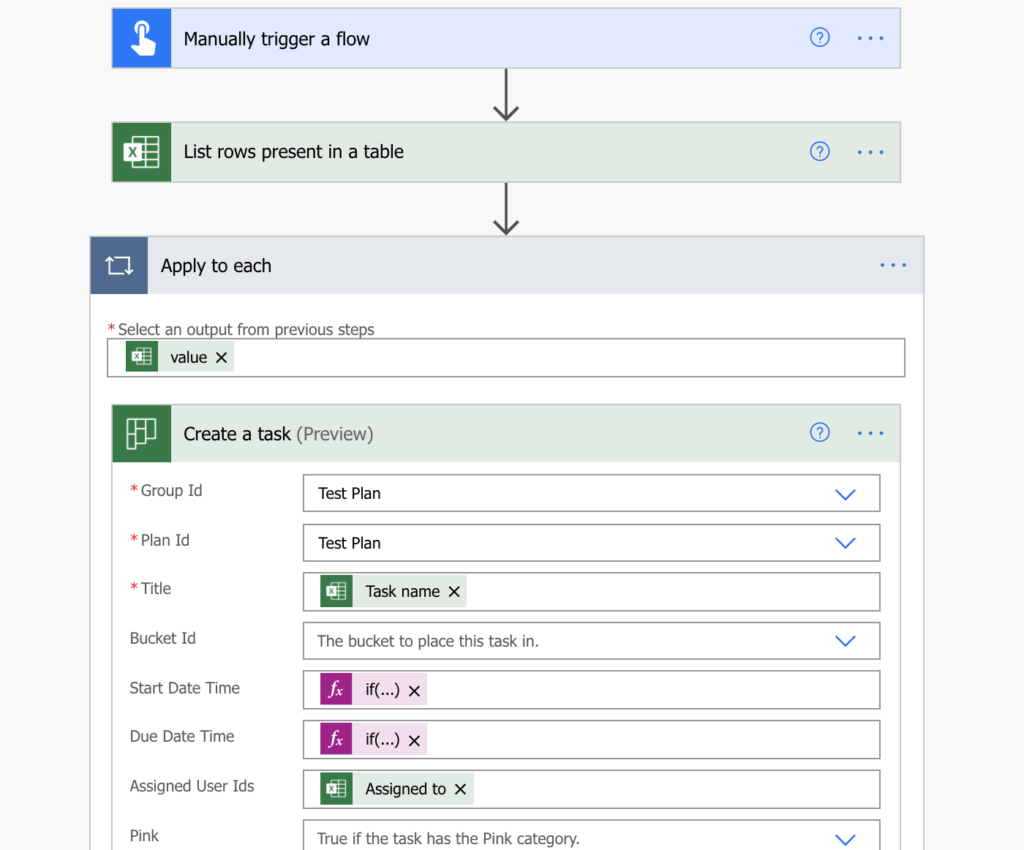

The PSA and Azure SQL DB instances were already created (including tables for the data in the database). The table will be cleared out and refreshed for every load. It's going to run every day at 5 AM EST, so verify that. Alternatively, you can use other tools if that is what you wish. You can see below the screenshot of the completed package. For this, click the drop-down list and select Flat File Source.

Before we start, we need a sample CSV and a target table in SQL Server.

Select the ResultSets Table1 from the dynamic content. Here, we specify the schedule.

Here's what it looks like after running the agent. Choose the Actors table. Then, select the SQL Server name and enter the necessary credentials. Then, in the file type, select CSV files (*.csv). Today, the reason is still the same. Open Microsoft Power Automate, add a new flow, and name the flow.

Finally, we will use a cloud ETL tool to import the CSV file to SQL Server. ETL tools are the most flexible for on-premise and cloud data of various types. Based on the data types and sizes, refer to the table below on what to set for each column.


With the CData ODBC Driver for SQL Server, you get live connectivity to SQL Server data within your Microsoft Power Automate workflows.

The BULK INSERT command requires a few arguments to describe the layout of the CSV file and the location of the file. You can define your own templates of the file with a format file: https://learn.microsoft.com/en-us/sql/t-sql/statements/bulk-insert-transact-sql, https://jamesmccaffrey.wordpress.com/2010/06/21/using-sql-bulk-insert-with-a-format-file/.

You can also choose Import Flat File, but it will always dump to a new table. You can save the entire import configuration to an SSIS package.

I assume that you have a basic understanding of Azure Logic Apps. Add LookupMapXML tags in the data map to indicate that the data lookup will be initiated and performed on a source file that is used in the import. Click each and set the type and size. Provide the server and database name.


Follow the instructions below to see how to get more than 5,000 rows. To get more than 5,000 rows, turn on Pagination and set the threshold up to 100,000 in Settings. What if you have more than 100,000 rows to process?

Create an Azure SQL Database with a sample database from the Azure portal. Microsoft Power Automate was previously known as Microsoft Flow. The Dataverse connector returns up to 5,000 rows by default. This can be improved. Parse the import file.

As shown below, all steps are executed successfully for the logic app.

- If column mappings match source and target, it just works.
- Allows many data sources and destinations, not just SQL Server.
- Saving to SSIS catalog and scheduling is possible, but limited to what was defined.
- If you don't have the specifications of the column types and sizes in the CSV file, column mapping is cumbersome.
- No way to get the CSV from Google Drive, OneDrive, or a similar cloud storage.

Can't SSMS detect the correct data types for each column?

If you use an email address multiple times in a logic app and modify it, you can quickly change the variable value without looking for each step. Subject variable: Similarly, add another variable to store the subject of the email sent by Azure Logic Apps.


I am Rajendra Gupta, Database Specialist and Architect, helping organizations implement Microsoft SQL Server, Azure, Couchbase, and AWS solutions fast and efficiently, fix related issues, and tune performance, with over 14 years of experience.

In this example, the target database is CSV-MSSQL-TEST. Then select Edit Mappings to see if the columns from the source match the target. So, start by clicking NEW and then Import. The second and last task is to insert the rows in the CSV file to SQL Server. This will be used to upload to SQL Server using 3 of the different ways to import CSV.

Open Microsoft Power Automate, add a new flow, and name the flow. FIRSTROW = 2 because the first row contains the column names.

Step 1. If you attempted to import using BULK INSERT earlier, there is data present in the target table. Once the connection details are specified, in the SQL Server connector action, add Execute stored procedure and select the procedure name from the drop-down. Since you haven't specifically mentioned the changes in detail, I can't provide you with much more information. Option 1: Import by creating and modifying a file template. Another way to do it is in PowerShell.

A biometrics system needs to be integrated into a human resources system for attendance purposes. Upload the transformed data into the target Dataverse server. In the actions menu, click on Send an email (V2). Using Power Automate, get the file contents and dump them into a staging table. Now, you need to specify the SQL Server as the target of the import process. Then, select the actor.csv file in Google Drive.

After configuring the DSN for SQL Server, you are ready to integrate SQL Server data into your Power Automate workflows. To implement data import, you typically do the following: Create a comma-separated values (CSV), XML Spreadsheet 2003 (XMLSS), or text source file. https://dbisweb.wordpress.com/. You can look into using BIML, which dynamically generates packages based on the metadata at run time. So, we need to set it up ourselves. For more information about the Import Data Wizard, see Dataverse Help.

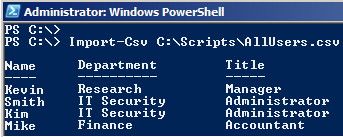


My best idea so far is to use custom C# wrapped in ancient SSIS. And then, click OK. You can use the Microsoft ODBC Data Source Administrator to create and configure ODBC DSNs. Then, import the CSV file to SQL Server. Set the count to 5,000.

First, you need to go to Object Explorer, expand the Databases folder, and select the target database. You can import only a subset of the columns from the CSV source if you specify a Header list. You will ask how to do it.

You can use these 3 ways to import CSV to SQL Server: all are viable tools depending on your needs.

You can use either SSIS or a cloud solution like Skyvia. And the other is using SQL Server. For example, suppose you have a monitoring database, and you require the reports delivered to your email daily as per its defined schedule.

BULK INSERT is good for on-premise import jobs with a little coding. Here's how.

BULK INSERT is another option you can choose. You can define your own templates of the file with it.
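As a rough illustration of the arguments BULK INSERT needs, the following Python sketch only assembles the T-SQL text; it does not connect to SQL Server. The table name `dbo.Actors`, the UNC path, and the helper name `build_bulk_insert` are assumptions made for the example. `FIRSTROW`, `FIELDTERMINATOR`, and `ROWTERMINATOR` are standard BULK INSERT options.

```python
def build_bulk_insert(table: str, csv_path: str, first_row: int = 2,
                      field_terminator: str = ",",
                      row_terminator: str = "\\n") -> str:
    """Return the text of a T-SQL BULK INSERT statement for a delimited file.

    The table name is assumed to come from trusted configuration,
    not from user input, because it is placed in the statement as-is.
    """
    # Double any single quote so the path stays a valid T-SQL string literal.
    safe_path = csv_path.replace("'", "''")
    return (
        f"BULK INSERT {table}\n"
        f"FROM '{safe_path}'\n"
        "WITH (\n"
        f"    FIRSTROW = {first_row},\n"  # 2 skips the header row
        f"    FIELDTERMINATOR = '{field_terminator}',\n"
        f"    ROWTERMINATOR = '{row_terminator}'\n"
        ");"
    )


# Hypothetical table and UNC path, used only for illustration.
statement = build_bulk_insert("dbo.Actors", r"\\fileserver\imports\actor.csv")
print(statement)
```

The generated statement could then be pasted into SSMS or passed to whatever client runs your T-SQL. Adjust the terminators if the file uses a different delimiter or Windows line endings.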


How to import multiple Excel sheets in an Excel source to SQL using SSIS?

Add transformation mappings for import

You can expand any step from the execution and retrieve the details.


Add custom OwnerMetadataXML tags in the data map to match the user rows in the source file with the rows of the user (system user) in Dataverse. Or click Finish to run the import process. Then, download and install the Skyvia agent. And the database name is CSV-MSSQL-TEST. If you want to explore more on a particular run history, click on it to display the workflow details for that specific run. Associate an import file with a data map. Now you have a workflow to move SQL Server data into a CSV file.


Click Browse and specify the path of the CSV file as shown in the screenshot. In this tutorial, I'm using the server name MS-SQLSERVER. SQL Server in the cloud, or Azure SQL, is also one of the top 3 DBMS of 2020. If you use multiple SQL Server connections in a logic app, you can verify the current connection at the bottom of the Execute stored procedure (V2) action. A new window will appear. These import processes are scheduled using the SQL Server Agent, which should have a happy ending.

What comes to mind right away as the easiest way to do this is to use a Power BI Dataflow between the Excel file and SQL Server. If you prefer not to involve Power BI at all, you can use Power Automate if you really want to. However, to do it most effectively out of the box with the Excel connector, it is indeed best if the data is inside a Table. You can also include related data, such as notes and attachments.

Add an "Execute SQL statement" action (Actions -> Database) and configure the properties. You can use Apache NiFi, an open-source tool. Or a cloud integration platform like Skyvia. It has an import/export tool for CSV files with automapping of columns. It can integrate with your on-premise SQL Server using an agent. You can check out some tips here to integrate CSV files to SQL Server.

Or are the changes optional data or something? You can import a CSV file into a specific database. It creates the CSV table columns automatically based on the input items. What is the solution, you will ask? To define the variable, add the name, set the type to string, and specify its value.

And so, an error will occur. BULK INSERT is another option you can choose. Here's how to import CSV to an MSSQL table using SSMS. You will see a mapping between the id column of the source and target.

What about the target system using SQL Server?

The ODBC Driver offers Direct Mode access to SQL Server through standard Open Database Connectivity (ODBC), providing extensive compatibility with current and legacy MS SQL versions. As we all know, the "insert rows" (SQL Server) action inserts line by line and is so slow.

- A lot of data sources and destinations, including cloud storages.
- Experienced ETL professionals will experience an easy learning curve.
- Schedule an unattended execution of packages.
- Flexible pricing based on current needs and usage.
- No need to install development tools (except when Agent is required).

We do not want these results in the email body. The answer is described in detail below. The file will be dropped out to our team SharePoint environment for document storage. Expand the Databases folder.


Looking for some advice on importing .CSV data into a SQL database. Once you have configured all the actions for the flow, click the disk icon to save the flow. To avoid that, choose Delete rows in the destination table. Let's first create a dummy database named Bar and try to import the CSV file into the Bar database.

It is possible. You could use Data Operation actions and expressions to process the CSV file, or use a third-party connector (such as Encodian) to convert the CSV to an object, then insert the items in that object into SQL via the SQL connector. In our case, we will just run it immediately. Check it out below.

But you need to test it. Click Save to save the package. First, whatever existing records there are in the Actors table should be deleted.
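The delete-then-insert pattern described above can be sketched as follows. This is only an illustration: it uses Python's built-in `sqlite3` module as a stand-in for SQL Server so that it runs anywhere, and the `first_name` and `last_name` columns and the helper name `reload_table` are assumptions, not the tutorial's actual schema.

```python
import sqlite3


def reload_table(conn: sqlite3.Connection, rows: list) -> None:
    """Clear the Actors table and load the new rows in one transaction."""
    # "with conn" commits on success and rolls back on error, so a failed
    # load does not leave the table empty or half-filled.
    with conn:
        conn.execute("DELETE FROM Actors")
        conn.executemany(
            "INSERT INTO Actors (id, first_name, last_name) VALUES (?, ?, ?)",
            rows,
        )


# In-memory SQLite database used purely as a stand-in for SQL Server.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Actors (id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT)"
)

# Loading twice does not duplicate rows or violate the primary key,
# because each load starts by deleting the previous contents.
reload_table(conn, [(1, "Penelope", "Guiness")])
reload_table(conn, [(1, "Penelope", "Guiness"), (2, "Nick", "Wahlberg")])

row_count = conn.execute("SELECT COUNT(*) FROM Actors").fetchone()[0]
print(row_count)
```

Wrapping both statements in one transaction is a design choice for the sketch; the tools in this tutorial expose the same idea as a separate Delete step followed by an Insert step.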

Please pay attention to where you install the agent.

Use the variable on the skip token to get the next 5,000 rows: Set the next skip token from the @odata.nextLink value to the variable with the expression below. In our case, there is a generic header in the first 8 rows of our Excel file. You can even get the CSV from cloud storage to your on-premise SQL Server. However, one of our vendors from which we're receiving data likes to change up the file format every now and then (feels like twice a month) and it is a royal pain to implement these changes in SSIS. Is the insert to SQL Server for when the Parse JSON action succeeds?

At this point, we are ready to run the full pipeline. You can pick the filters like this. Can you share what is in the script you are passing to the SQL action? There are several ways you can import data into Power Platform (or Dynamics 365). Now, connect to your email inbox and verify the email subject, body content, and format. Run data import by using command-line scripts. Power Automate can post JSON to SQL Server, but it cannot handle the CSV to JSON transformation. To implement the workflow, we need to create a logic app in the Azure portal. And you're done! Several SharePoint lists need to be synced to a SQL Server database for data analysis.

- If the target column uses a data type too small for the data, an error will occur.
- Scheduling of execution is possible in SQL Server Agent.
- You cannot specify a CSV from cloud storage like Google Drive or OneDrive.
- Allows only SQL Server as the target database.
- Requires a technical person to code, run, and monitor.

I try to separate the fields in the CSV and include them as parameters in Execute SQL. I've added more to my answer, but it looks like there is a temporary problem with the Q&A forum displaying images. @Bruno Lucas I need to create a CSV table and I would like to insert it in SQL Server. After configuring the action, click Save. Then click SQL Server. The location of the CSV should follow the rules of the Universal Naming Convention (UNC). When a new email arrives, save the attachments to File System.
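The CSV-to-JSON transformation mentioned above, which Power Automate cannot do on its own, can be sketched outside the flow. The following is a minimal Python illustration using only the standard library; the column names in the sample and the helper name `csv_to_json` are made up for the example, and every value is kept as a string.

```python
import csv
import io
import json


def csv_to_json(csv_text: str) -> str:
    """Convert CSV text with a header row into a JSON array of objects."""
    # DictReader uses the first row as the keys for every following row.
    reader = csv.DictReader(io.StringIO(csv_text))
    return json.dumps(list(reader))


# Hypothetical sample resembling an actor.csv file.
sample = "id,first_name,last_name\n1,Penelope,Guiness\n2,Nick,Wahlberg\n"
payload = csv_to_json(sample)
print(payload)
```

A payload shaped like this is what a flow could then parse and hand to a SQL Server action. Type conversion (for example, turning `id` into a number) is deliberately left out of the sketch.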

Right-click that database and then select Tasks. Then, click Next step and select the Insert operation instead of Delete. The final part is to create the Skyvia package. It should come as a CSV file attachment.


Therefore, if you have migrated to it, you need an alternative way of implementing your requirements. Click on Author and Monitor to access the Data Factory development environment. Import CSV to Microsoft SQL Server. Before you can use it, you need an account on Skyvia and Google. From here, click Next.


Click Next step again to proceed to mapping settings. You will see the progress in the next window if you click Finish. Imagine you have 2 proprietary systems. It has migration info in an XML file. Here's a screenshot of a good connection. View source data that is stored inside the temporary parse tables. Parsing, transforming, and uploading of data is done by the asynchronous jobs that run in the background. Click NEW again and select Connection.

NOTE: the Data flow debug switch will need to be set to the On position for data to be shown. The transformation expressions are:

- `regexReplace({Default Hour Type}, '[*]', '')`
- `split(split(toString({Project Start Date}), ' ')[1],'/')[3] + '-' + lpad(split(split(toString({Project Start Date}), ' ')[1],'/')[1], 2, '0') + '-' + lpad(split(split(toString({Project Start Date}), ' ')[1],'/')[2], 2, '0')`
- `split(split(toString({Project End Date}), ' ')[1],'/')[3] + '-' + lpad(split(split(toString({Project End Date}), ' ')[1],'/')[1], 2, '0') + '-' + lpad(split(split(toString({Project End Date}), ' ')[1],'/')[2], 2, '0')`
- `split(split(toString({PIA Day}), ' ')[1],'/')[3] + '-' + lpad(split(split(toString({PIA Day}), ' ')[1],'/')[1], 2, '0') + '-' + lpad(split(split(toString({PIA Day}), ' ')[1],'/')[2], 2, '0')`

This will create an import package. You need to replace both List_rows_-_GL_Entries_with_Skip_Token with your name of List rows action (previous step): if (

One is using a proprietary NoSQL database like PayPal's. If the table is enabled for import, the definition property IsImportable is set to true.
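To make the data flow expressions above easier to follow, here is a rough Python equivalent. It assumes the source values look like `3/7/2023 12:00:00 AM`, that is, month/day/year followed by a time. That input format is an assumption inferred from the expressions, and the function names are invented for the sketch.

```python
import re


def strip_asterisks(value: str) -> str:
    """Mirror regexReplace(value, '[*]', ''): remove every asterisk."""
    return re.sub(r"[*]", "", value)


def to_iso_date(value: str) -> str:
    """Mirror the split/lpad expression: 'M/D/YYYY time' -> 'YYYY-MM-DD'."""
    # Keep only the date part before the first space (index 1 in the
    # data flow expression, which counts from 1; index 0 in Python).
    date_part = value.split(" ")[0]
    first, second, year = date_part.split("/")
    # zfill(2) plays the role of lpad(..., 2, '0').
    return f"{year}-{first.zfill(2)}-{second.zfill(2)}"


print(strip_asterisks("Regular*"))
print(to_iso_date("3/7/2023 12:00:00 AM"))
```

The rewrite exists because a year-first, zero-padded date string is unambiguous when it is loaded into a date column, whereas a slash-separated value depends on regional settings.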

In this series of Azure automation articles, we have implemented a number of tasks using Azure Logic Apps. This article uses Azure Logic Apps to send a query result to the specified recipient automatically. Then enter the server name, credentials, and database name. I am the creator of one of the biggest free online collections of articles on a single topic, with a 50-part series on SQL Server Always On Availability Groups.

From here, your agent configuration has been completed. If you prefer not to code, another useful tool is Import Data from SQL Server Management Studio (SSMS).

If you save and run the Logic App at this point, you should see the corresponding CSV version of the original XLSX file in blob storage. From: Here, specify the input that needs to be converted into a CSV file. And the target is CSV-MSSQL-TEST, the SQL Server connection.

Click the play icon to run the flow. You can code less or use graphical tools. Azure Logic Apps is a serverless solution to define and implement business logic, conditions, triggers, and workflows. The steps are almost the same, except you need to define all column types and sizes based on Table 1 earlier.
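When the column types and sizes are not documented, a quick scan of the CSV can suggest starting values. The sketch below is a rough heuristic and not how SSMS or any other tool decides: it proposes `INT` when every non-empty value is a whole number and otherwise `VARCHAR(n)` sized to the longest value seen. The sample data and the helper name `suggest_column_types` are assumptions.

```python
import csv
import io
import re

INTEGER_PATTERN = re.compile(r"-?\d+")


def suggest_column_types(csv_text: str) -> dict:
    """Suggest a SQL type per column from the values in a CSV sample."""
    reader = csv.DictReader(io.StringIO(csv_text))
    columns = list(reader.fieldnames or [])
    max_len = {name: 0 for name in columns}
    all_int = {name: True for name in columns}
    for row in reader:
        for name in columns:
            value = row.get(name) or ""
            max_len[name] = max(max_len[name], len(value))
            # Empty cells do not disqualify a column from being numeric.
            if value and not INTEGER_PATTERN.fullmatch(value):
                all_int[name] = False
    suggestions = {}
    for name in columns:
        if all_int[name] and max_len[name] > 0:
            suggestions[name] = "INT"
        else:
            suggestions[name] = f"VARCHAR({max(max_len[name], 1)})"
    return suggestions


# Hypothetical sample resembling an actor.csv file.
sample = (
    "id,first_name,last_name,last_update\n"
    "1,Penelope,Guiness,2006-02-15\n"
    "2,Nick,Wahlberg,2006-02-15\n"
)
suggested = suggest_column_types(sample)
print(suggested)
```

Treat the result as a lower bound: a sample rarely contains the longest value the column will ever hold, so round the sizes up before creating the table.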

The following steps convert the XLSX documents to CSV, transform the values, and copy them to Azure SQL DB using a daily Azure Data Factory V2 trigger. Use the variable on the skip token to get the next 5,000 rows.
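The skip-token loop can be sketched as follows. To keep the example runnable without any service, the data source is simulated in memory: `make_fake_source` is a hypothetical stand-in for the List rows action, and only the `value` and `@odata.nextLink` keys follow the response shape described in this article.

```python
from typing import Any, Callable, Dict, Iterator, Optional

Page = Dict[str, Any]


def iter_all_rows(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[dict]:
    """Yield every row by following @odata.nextLink until it is absent."""
    skip_token: Optional[str] = None
    while True:
        page = fetch_page(skip_token)
        yield from page["value"]
        # The next link plays the role of the skip-token variable.
        skip_token = page.get("@odata.nextLink")
        if not skip_token:
            break


def make_fake_source(total_rows: int, page_size: int = 5000):
    """Build a stand-in for a paged connector (illustration only)."""
    rows = [{"id": i} for i in range(total_rows)]

    def fetch_page(token: Optional[str]) -> Page:
        start = int(token) if token else 0
        end = min(start + page_size, total_rows)
        page: Page = {"value": rows[start:end]}
        if end < total_rows:
            page["@odata.nextLink"] = str(end)
        return page

    return fetch_page


all_rows = list(iter_all_rows(make_fake_source(12_000)))
print(len(all_rows))
```

In a flow, the same idea is expressed with a variable and a Do until loop: store the next link after each call and stop when it comes back empty.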